Canadian Politician Charged with Threatening Rival, Blames AI for Voicemail

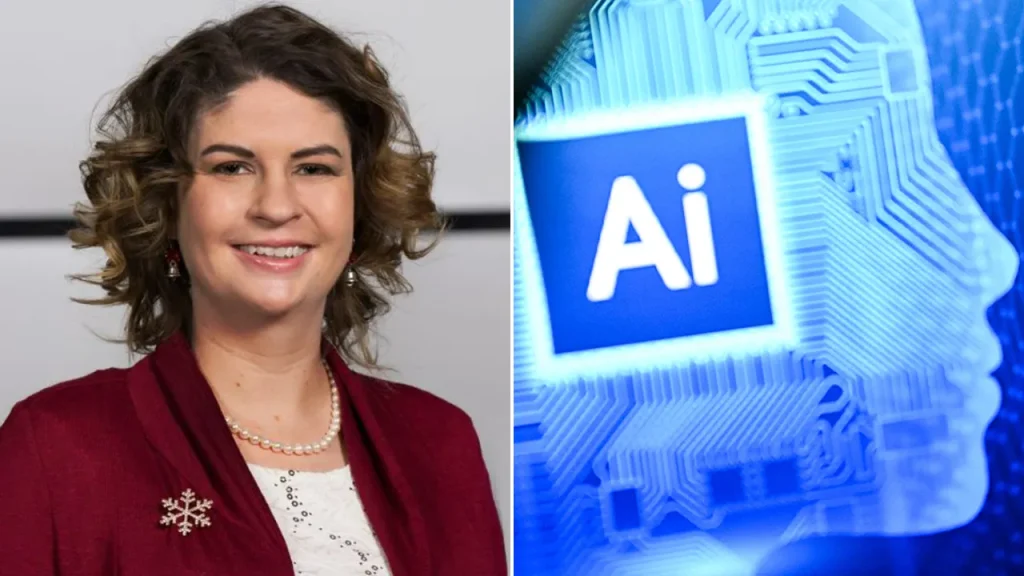

In an unusual case of political drama in Ontario, Canada, local councilor Corinna Traill has been arrested and charged with threatening a potential mayoral candidate, a case that sits at the intersection of local politics and claims about artificial intelligence. Traill, who had served her community for over a decade, now faces two counts of uttering threats over allegations that she left a threatening voicemail for former mayoral hopeful Tom Dingwall last August. The Peterborough Police Service confirmed her arrest on Wednesday and released her on her own recognizance, with a court appearance scheduled for January. The case raises serious questions about the limits of political rivalry and about the emerging use of AI as a defense against allegations of misconduct.

The controversy became public in September, when Dingwall posted his allegations on Facebook. According to his post, Traill left him a voicemail telling him not to run for mayor so that her friend could run unopposed. The message then allegedly turned sinister: Dingwall claimed that Traill threatened to “come to my home, kill me, and sexually assault my wife, then sexually assault her again.” In calling for Traill’s resignation, Dingwall stressed that no elected official should use “intimidation or threats to dissuade anyone from pursuing elected office or engaging in public service,” framing the allegations as an attack on the democratic process and on the ethical standards expected of public officials.

Traill responded with her own Facebook statement, firmly denying that she was responsible for the voicemail. Her defense rested on technology: “I want to state clearly and unequivocally: I did not create this message. I have been advised that artificial intelligence technology was involved. Portions of the voicemail were my voice, but other parts were artificially generated.” The claim makes this one of the more prominent cases of a public figure attributing threatening communications to AI manipulation, and it implies that someone combined genuine recordings of her voice with synthetic audio to fabricate the threats. Traill said her team was working to identify who might have created the message, casting herself as the victim of technological deception rather than the author of threats.

The case comes amid growing concern that AI can produce convincing deepfakes and voice clones for use in harassment, fraud, or political sabotage. As tools that can simulate a person’s voice with remarkable accuracy become more accessible, the line between authentic and artificially generated recordings is increasingly blurred. That context complicates the investigation, which may require forensic audio analysis to determine whether the voicemail shows signs of synthetic manipulation or is a genuine recording. Law enforcement agencies worldwide are still developing protocols for handling evidence when AI generation is claimed, so this case could help shape how courts evaluate such defenses.

Despite Traill’s defense, police apparently found sufficient grounds to lay criminal charges, which may suggest they have evidence that undercuts the AI claim. Uttering threats is a serious offense under Canada’s Criminal Code: threatening death or bodily harm can be prosecuted as an indictable offense carrying up to five years in prison, and the charge does not require that the accused intended to carry the threat out. The case has shaken the local political landscape, with community members expressing shock that a long-serving councilor could be implicated in such allegations. It also highlights how personal local political rivalries can become, and how they can escalate from ordinary disagreement into intimidation.

As the case moves through the courts, it is likely to draw attention beyond Ontario, both as a cautionary tale about political conduct and as a possible test of how AI-related defenses are treated in criminal proceedings. If the allegations prove true, Traill’s constituents would have reason to see the case as a profound betrayal of public trust. For Dingwall, who chose to make the threats public, the charges lend weight to his decision to speak out about alleged intimidation. For the broader public, the case is a sobering reminder of both the fragility of civil political discourse and the complications that emerging technologies bring to questions of evidence and accountability. Whether or not Traill’s defense proves credible, the case shows that the intersection of politics, technology, and personal conduct will keep presenting novel challenges for legal systems and democratic institutions.