Microsoft’s Maia 200 Signals New Era in Cloud AI Chip Wars

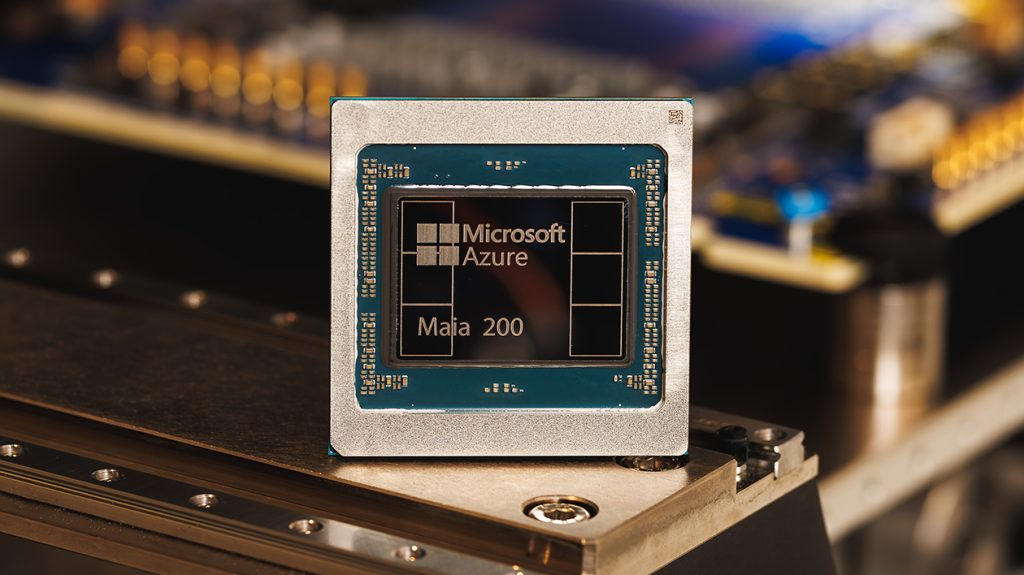

Microsoft has unveiled its second-generation custom AI chip, the Maia 200, positioning itself as a serious contender in the increasingly competitive cloud AI silicon landscape. Already running in Microsoft’s Iowa data center near Des Moines, the new chip comes with ambitious performance claims: it reportedly delivers three times the performance of Amazon’s latest Trainium chip on certain benchmarks and exceeds Google’s most recent tensor processing unit on others. This leap comes less than three years after Microsoft first revealed its custom chip ambitions with the Maia 100 in late 2023. What makes the development particularly noteworthy is how quickly Microsoft has closed the gap with Google, which has been refining its TPUs for nearly a decade, and Amazon, whose Trainium line is already advancing to its fourth generation. The Maia 200 is more than a technological showcase. It is already handling critical workloads, powering OpenAI’s GPT-5.2 models, Microsoft 365 Copilot, and internal projects from Microsoft’s Superintelligence team, with a second deployment planned near Phoenix.

The rise of custom silicon among cloud giants reflects a fundamental shift in how these companies approach the economics of artificial intelligence. While Nvidia has dominated the AI chip market with its powerful GPUs, the astronomical costs of training and serving advanced AI models have pushed Microsoft, Google, and Amazon to develop their own specialized hardware. The financial calculus is straightforward: training a large language model is a significant but one-time expense, whereas serving that model to millions of users creates an ongoing operational cost that directly affects profitability. Microsoft claims the Maia 200 delivers 30% better performance-per-dollar than its current hardware, a figure that could translate to hundreds of millions of dollars in savings at scale. This economic reality has turned what was once primarily a technological competition into a strategic business imperative, with each cloud provider betting that custom chips optimized for its specific AI workloads will provide both technical advantages and cost efficiencies that commercial off-the-shelf solutions cannot match.
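To make the performance-per-dollar claim concrete, here is a rough back-of-the-envelope sketch. It assumes the workload stays fixed and that cost scales inversely with performance-per-dollar. The baseline spend is a purely hypothetical figure chosen for illustration, not a number reported by Microsoft, and the function names are invented for this example.

```python
# Illustrative arithmetic only: the baseline spend below is a made-up figure,
# not a number from Microsoft.


def cost_for_same_work(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost of the same workload after a fractional gain in performance-per-dollar."""
    return baseline_cost / (1.0 + perf_per_dollar_gain)


def savings_fraction(perf_per_dollar_gain: float) -> float:
    """Fraction of the baseline cost saved for an unchanged workload."""
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


HYPOTHETICAL_ANNUAL_INFERENCE_SPEND = 1_000_000_000.0  # assumed, in dollars
CLAIMED_GAIN = 0.30  # the 30% performance-per-dollar claim cited in the article

new_cost = cost_for_same_work(HYPOTHETICAL_ANNUAL_INFERENCE_SPEND, CLAIMED_GAIN)
savings = HYPOTHETICAL_ANNUAL_INFERENCE_SPEND - new_cost

print(f"Cost for the same work: ${new_cost:,.0f}")
print(f"Savings: ${savings:,.0f} ({savings_fraction(CLAIMED_GAIN):.1%})")
```

Under these assumptions, a 30% gain in performance-per-dollar lowers the bill for a fixed workload by about 23%, not 30%, because the same work now costs 1/1.3 of the original. On a hypothetical billion-dollar annual inference bill, that would still land in the hundreds of millions of dollars.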

What distinguishes Microsoft’s approach is its emphasis on vertical integration between silicon, AI models, and applications. Although it entered the custom chip race relatively late, Microsoft believes its tight coupling of hardware with widely used services like Copilot gives it a distinctive edge. The Maia 200 focuses specifically on inference, the process of running AI models after they’ve been trained, which aligns with Microsoft’s strategy of embedding AI capabilities across its product portfolio. This strategy reflects a growing recognition that AI infrastructure isn’t just about raw computational power but about creating optimized pathways from silicon to software that can deliver AI capabilities more efficiently. By controlling more of this stack, Microsoft can potentially tune performance at every level, from how electrons flow through transistors to how information is presented in user interfaces.

The competition among cloud giants for AI chip supremacy represents a significant shift in the technology landscape. For decades, Intel dominated general-purpose computing, with specialized chips playing supporting roles. The AI revolution has inverted this dynamic, with specialized AI accelerators now driving the industry’s most important workloads and commanding the largest investments. Each cloud provider is taking a slightly different approach: Google’s TPUs reflect its early-mover advantage and research heritage, Amazon’s Trainium and Inferentia chips emphasize practical commercial applications, and Microsoft’s Maia line leverages the company’s unique position straddling enterprise software, cloud infrastructure, and consumer services. This diversity of approaches creates a fascinating natural experiment in silicon design philosophy, with billions of dollars riding on which approach proves most effective at scale.

Beyond the technical specifications and performance claims lies a broader strategic contest for the future of AI infrastructure. The cloud providers aren’t just competing on chip performance; they’re building comprehensive AI platforms that combine silicon, software frameworks, pre-trained models, and developer tools. Microsoft’s announcement of a software development kit that will allow AI startups and researchers to optimize their models for Maia 200 signals that the company isn’t just building chips for internal use—it’s creating an ecosystem. This ecosystem approach recognizes that the value of custom silicon isn’t just in raw performance but in making advanced AI capabilities more accessible and affordable to a broader range of developers and businesses. By opening early preview access to developers and academics, Microsoft is acknowledging that the ultimate success of its chip strategy depends not just on technical excellence but on adoption.

As artificial intelligence continues to transform industries and societies, the infrastructure powering these systems takes on strategic importance beyond mere technology concerns. The companies that control the most efficient AI compute infrastructure will have advantages in developing new AI capabilities, controlling their costs, and setting the direction for how these technologies evolve. Microsoft’s Maia 200 represents not just a technical achievement but a statement of strategic intent—that the company plans to be at the forefront of both AI software and the silicon that powers it. While Nvidia continues to dominate the overall AI chip market with its powerful and flexible GPUs, the increasing investments by cloud providers in custom silicon suggest we’re entering an era where AI infrastructure will be more diverse, specialized, and tightly integrated with software. For businesses and developers building on AI, this competition promises more options, better performance, and potentially lower costs—but also a more complex landscape of technology choices tied to specific cloud platforms.