Microsoft’s AI Vision Meets Shareholder Concerns at Annual Meeting

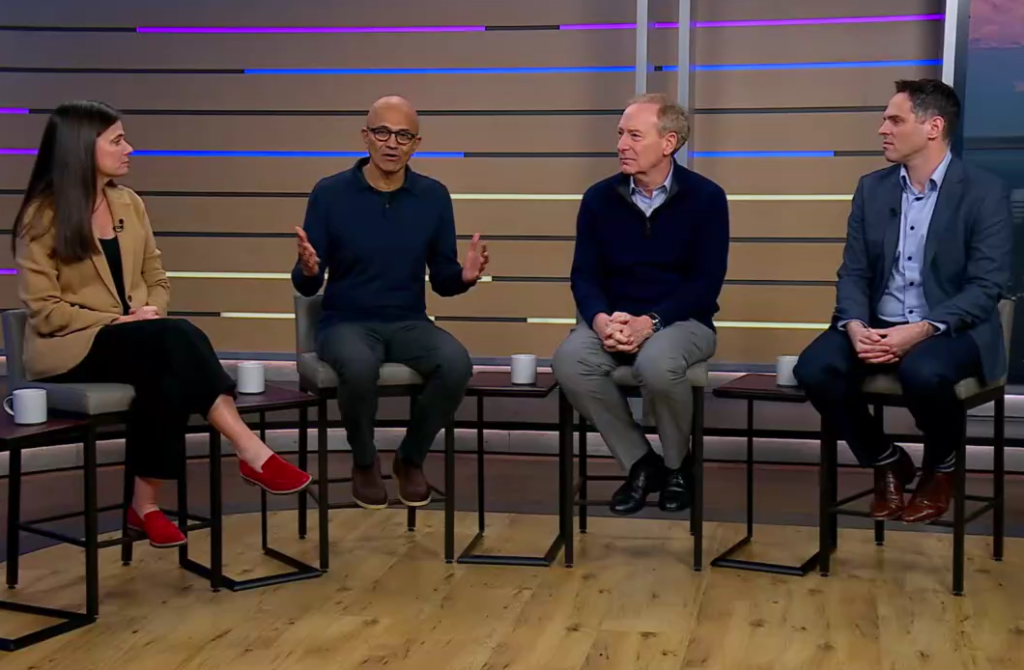

At Microsoft’s annual shareholder meeting on December 5, 2025, executives painted an optimistic picture of artificial intelligence’s future while facing pointed questions about the technology’s societal implications. CEO Satya Nadella, CFO Amy Hood, Vice Chair Brad Smith, and Investor Relations head Jonathan Nielsen presented a company at the forefront of AI development, describing a technology poised to transform healthcare, cybersecurity, and business operations worldwide. Yet the virtual meeting highlighted the tension between technological progress and ethical responsibility as shareholder proposals raised concerns about AI’s potentially darker applications.

The meeting’s most striking moment came when shareholder William Flaig, CEO of Ridgeline Research, drew parallels between George Orwell’s dystopian classic “1984” and the potential risks of AI censorship. In a thought-provoking presentation, Flaig quoted Microsoft’s own Copilot AI chatbot, which had told him “the risk lies not in AI itself, but in how it’s deployed” – a statement that seemed to validate his concerns about algorithmic bias and suppression of religious and political speech. This intervention embodied the meeting’s central tension: Microsoft’s boundless technological ambition confronted by real-world anxieties about how these powerful tools might reshape society, potentially in ways that limit rather than expand human freedom and autonomy.

Responding to these concerns, CEO Satya Nadella emphasized Microsoft’s human-centered approach to AI development. “We’re putting the person and the human at the center,” Nadella assured shareholders, describing technology that users “can delegate to, they can steer, they can control.” He added that Microsoft has moved beyond abstract ethical principles to implement safeguards for fairness, transparency, security, and privacy as part of its “everyday engineering practice.” This commitment to responsible AI development represents Microsoft’s attempt to balance innovation with ethical considerations: it acknowledges shareholders’ concerns while maintaining that the technology can be developed safely under the right governance framework.

Brad Smith, Microsoft’s vice chair and president, addressed broader societal implications by drawing a parallel to an earlier technological transition. Looking back nearly two decades after the iPhone’s debut, Smith compared the rise of AI to the introduction of smartphones in schools. “I think quite rightly, people have learned from that experience,” he noted. Smith suggested that decisions about AI’s role in education and other sensitive domains should involve broader stakeholder input rather than being determined solely by tech companies. “Let’s have these conversations now,” he urged, positioning Microsoft as a responsible participant in a larger societal dialogue rather than a unilateral decision-maker. This framing acknowledges the scope of public concern while suggesting that technology companies should be partners rather than sole arbiters in determining AI’s appropriate applications.

While acknowledging risks, Microsoft’s leadership emphasized the tremendous business opportunity that AI represents. Nadella described building a “planet-scale cloud and AI factory” and taking a “full stack approach” to capitalize on what he characterized as “a generational moment in technology.” CFO Amy Hood reinforced this vision with impressive financial metrics – over $281 billion in revenue and $128 billion in operating income for fiscal year 2025, with approximately $400 billion in committed contracts validating the company’s AI investments. Hood directly addressed concerns about excessive AI expenditure, characterizing it as “demand-driven spending” with margins stronger than at comparable points in Microsoft’s cloud transition. “Every time we think we’re getting close to meeting demand, demand increases again,” she explained, portraying AI as a sustainable growth driver rather than a speculative bubble.

Microsoft’s board recommended against all six shareholder proposals, which addressed AI censorship, data privacy, human rights, and climate issues, and preliminary voting suggested that shareholders had rejected all of them. Even so, the proposals’ very presence underscored the growing recognition that AI development raises profound questions about power, autonomy, and social impact, and cannot proceed without consideration of its broader implications. The meeting revealed a Microsoft determined to maintain its technological leadership while navigating societal concerns, positioning itself as a responsible steward of transformative technologies that could reshape human experience in profound ways. This balancing act between innovation and responsibility, between technological possibility and ethical governance, will likely define not just Microsoft’s future but the broader trajectory of AI development in the years ahead.