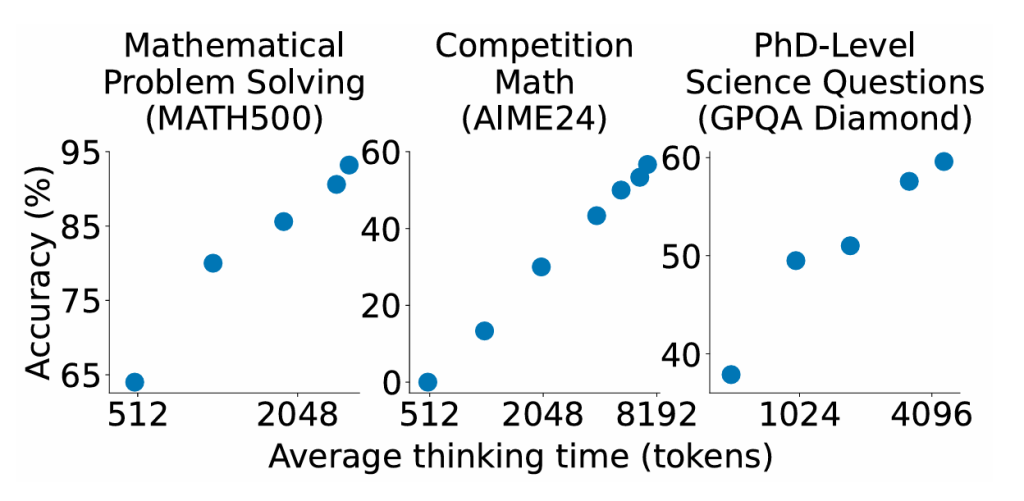

Researchers from Stanford University, the University of Washington, and the Allen Institute for AI (Ai2) have developed a technique that lets AI models “think” longer before answering, improving accuracy while keeping training costs low. In a recent study, they showed that a model which would otherwise answer too early can, when made to keep going, review and correct its own reasoning, reducing mistakes. This approach, called “test-time scaling,” keeps the model processing at the point where it would otherwise stop and commit to an answer, giving it a chance to catch a response that was initially incorrect. As a result, the model becomes more accurate without a larger training budget, which makes this kind of reasoning model more accessible and cost-effective.

The proposed technique scales the amount of computation spent at test time, that is, while the model is answering rather than while it is being trained, and the researchers describe it as a straightforward method. By preventing the model from stopping too early, it lets the model reprocess and improve its response, often avoiding errors that might otherwise be attributed to inadequate training. This approach aligns with the broader goal of making AI both more capable and more affordable, bringing the cost of developing a capable model down toward the roughly $50 figure reported for s1. Test-time scaling is therefore a promising step toward more efficient and affordable AI.
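The idea of making a model keep going when it tries to stop can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' implementation: the function `extend_thinking`, the stop marker, the continuation cue, and the toy stand-in model are all hypothetical names and values chosen for this example.

```python
from typing import Callable

STOP_MARKER = "<stop>"   # hypothetical marker a model emits when it wants to answer
CONTINUE_CUE = " Wait,"  # hypothetical cue appended to nudge the model to keep reasoning


def extend_thinking(
    generate: Callable[[str], str],
    prompt: str,
    min_rounds: int = 2,
    max_rounds: int = 4,
) -> str:
    """Call `generate` repeatedly, refusing to let it stop before `min_rounds`."""
    trace = prompt
    for round_no in range(1, max_rounds + 1):
        chunk = generate(trace)
        wants_to_stop = chunk.endswith(STOP_MARKER)
        if wants_to_stop:
            chunk = chunk[: -len(STOP_MARKER)]  # drop the marker, keep the reasoning
        trace += chunk
        # Stop at the hard cap, or once the model wants to stop and has thought enough.
        if round_no == max_rounds or (wants_to_stop and round_no >= min_rounds):
            break
        if wants_to_stop:
            trace += CONTINUE_CUE  # too early: push the model to reconsider
    return trace


def toy_model(context: str) -> str:
    """Stand-in for a real model: answers wrongly at first, corrects itself when nudged."""
    if CONTINUE_CUE in context:
        return " let me recount: 2 + 2 = 4." + STOP_MARKER
    return " 2 + 2 = 5." + STOP_MARKER
```

Calling `extend_thinking(toy_model, "Q: 2 + 2?", min_rounds=1)` lets the toy model stop after its first, wrong attempt, while `min_rounds=2` forces a second pass in which it corrects itself. The `max_rounds` cap bounds how much extra computation is spent per query.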

The newly developed AI model, referred to as s1 (short for “simple test-time scaling”), is open-sourced on GitHub, making it freely available for research and development. This open availability also means it can serve as a foundation for future projects, such as those discussed in the previous summary. The researchers noted that s1 is part of a broader trend in the AI community, exemplified by DeepSeek, of optimizing AI systems for better performance while reducing training costs. These advancements show that low-cost methods can leapfrog more expensive ones, bringing more capable and affordable AI tools closer to practical use.

The team behind this work includes several prominent researchers from Stanford University and the University of Washington. The results achieved with s1, and the research method itself, underscore growing confidence that AI can become more efficient and scalable. Team members brought expertise in neural networks, machine learning, optimization algorithms, and computational infrastructure. Their collective efforts have not only improved the capabilities of s1 but also contributed to the broader conversation on the future of AI. The work is a meaningful step toward more effective, efficient, and affordable intelligent systems.