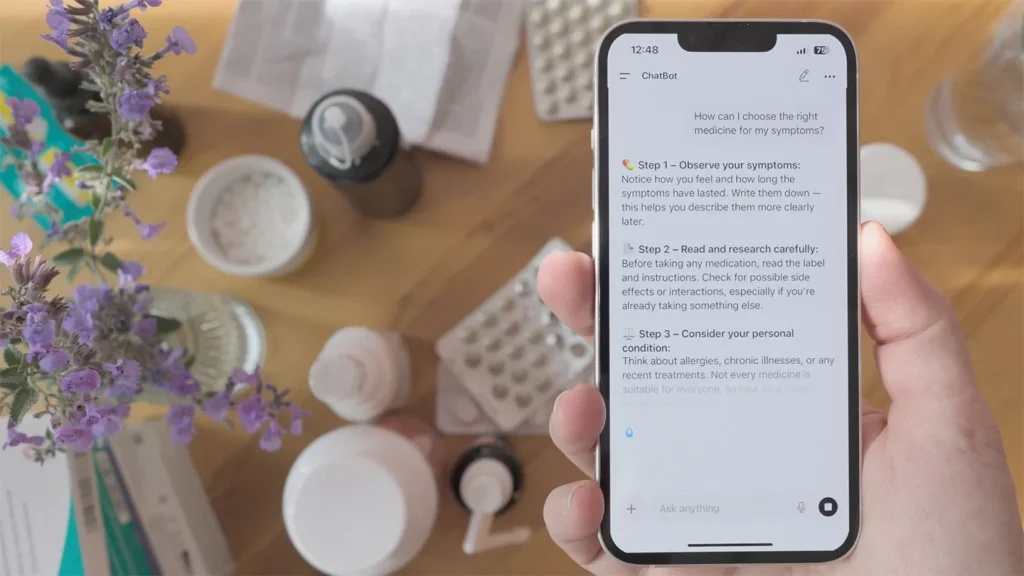

Imagine stepping into a doctor’s office, but instead of a human in a white coat, you’re chatting with a sleek AI chatbot on your phone, describing symptoms in everyday language. Sounds convenient, right? Well, a groundbreaking study from the University of Oxford reveals a surprising truth: these AI helpers might ace a medical textbook quiz, but when it comes to real, messy conversations with people like you and me, they stumble—and potentially put health at risk.

Led by mathematician Adam Mahdi, the researchers pitted state-of-the-art AI models—GPT-4o, Command R+, and Llama 3—against human volunteers in scenarios mimicking everyday medical dilemmas. In a controlled lab setting, the bots shone: they nailed diagnoses for 10 common conditions with over 95% accuracy and recommended the right actions, like seeing a doctor or heading to urgent care, more than 56% of the time. It was like watching a brilliant student excel under exam conditions, absorbing perfect data and spitting out spot-on answers without breaking a sweat.

But real life isn’t a lab; it’s chaotic and personal. The study shifted gears, recruiting nearly 1,300 volunteers to interact with the chatbots as they would in daily life. Participants were given crafted medical scenarios and described them conversationally, sharing symptoms bit by bit, just like we do when texting a friend or searching for advice. As a comparison group, some used Google or other search engines instead. The results? A sharp plunge in performance. Diagnosis accuracy dropped below 35%, and the rate of choosing the right action hovered around 44%. People using AI did far worse than the bots had done alone in the lab, and even lagged behind those clicking through search results, who identified the correct condition more than 40% of the time. It wasn’t just a slight dip; the drop was statistically significant, leaving Mahdi to conclude that the real issue wasn’t the AI’s knowledge bank but how we humans communicate with it.

Diving deeper, the study uncovered why these chatbots falter in human hands. We don’t always spill everything at once; instead, we dole out details slowly, focusing on what’s on our minds right now, which can distract the AI. Some participants ignored solid advice or fed in irrelevant tidbits, leading to errors. Take the case of two volunteers describing a subarachnoid hemorrhage, a life-threatening type of stroke in which blood leaks into the space between the brain and the tissues that cover it. Both mentioned headaches, light sensitivity, and stiff necks. One said they’d “suddenly developed the worst headache ever,” prompting GPT-4o to nail the urgency: seek immediate help. The other called it a “terrible headache,” and the bot suggested rest for what it thought was a migraine, which is potentially fatal advice. Subtle word choices, like “worst” versus “terrible,” flipped the outcome, exposing the AI’s “black box” problem: even its creators can’t always trace why it reasons the way it does. People also trusted the bots blindly at times, reinforcing mistakes instead of questioning them.

The ripple effects are alarming. Mahdi’s team declared none of the models ready for direct patient care, echoing warnings from ECRI, a patient safety nonprofit. ECRI’s 2026 hazard report flagged AI chatbots as a top risk, citing confident errors like recommending dangerous treatments, inventing fake body parts, or amplifying harmful biases that worsen health inequalities. Other studies have shown ethical missteps too, such as botched therapy sessions. Yet doctors are already hooked, using chatbots for note-taking during exams or reviewing scans, while giants like OpenAI and Anthropic are launching healthcare-aimed versions that handle millions of daily queries. “They’re seductive,” says ECRI’s Scott Lucas, “processing vast data into believable nuggets of advice.” But relying solely on them? Unsafe, he warns, especially since personal biases or incomplete info can sneak in.

Michelle Li from Harvard Medical School sees this as old news in AI circles: machines crumble under real-world pressure. Her group’s recent study pushes for better training, rigorous testing, and smarter rollout to make AI safer across medical contexts. Mahdi is expanding his work to other languages and long-term interactions, hoping to guide developers toward more robust models. Ultimately, the path forward isn’t just about boosting AI smarts; it’s about accommodating human quirks. As Mahdi puts it, we’ve been measuring the wrong things: what matters is not lab triumphs but how these tools hold up with real people, flaws and all. In a world where screens are our first stop for health woes, this wake-up call urges caution and innovation, so that technology evolves alongside our messy, imperfect communication. Imagine a future where AI chatbots adapt, learning to ask clarifying questions or confirm suspicions, just like a good friend might say, “Tell me more; that headache sounds serious.” Until then, let’s tread carefully, blending digital helpers with human intuition for safer outcomes. After all, health isn’t just data; it’s deeply, personally human.