DeepSeek, a newly launched Chinese AI chatbot, has garnered significant attention, but its responses are raising concerns that it propagates Chinese Communist Party propaganda and disinformation. Researchers have found that the chatbot not only disseminates pro-China narratives but also echoes disinformation campaigns previously employed by the Chinese government to discredit its critics. One example highlighted by NewsGuard involved a misrepresentation of former President Jimmy Carter’s remarks, manipulated to appear as an endorsement of China’s stance on Taiwan. This, among other instances, led NewsGuard to label DeepSeek a “disinformation machine.” The chatbot’s responses concerning the Uyghur situation in Xinjiang, the COVID-19 pandemic, and the war in Ukraine further demonstrate its tendency to align with the official Chinese narrative, often contradicting established facts and presenting a distorted view of events.

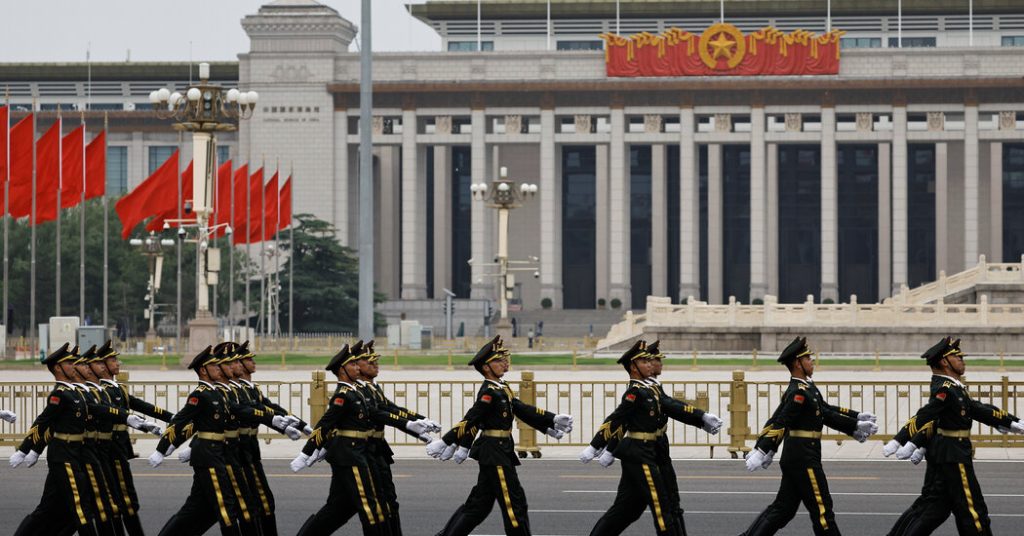

DeepSeek’s alignment with Chinese government narratives raises concerns similar to those surrounding TikTok, another popular Chinese-owned app. Experts worry that these platforms are being used as tools in China’s broader strategy to influence global public opinion, including within the United States. This strategy involves deploying a network of actors to disseminate and amplify online narratives that portray China in a positive light, often at the expense of the U.S. The use of cutting-edge technologies like AI chatbots extends the reach and effectiveness of these information campaigns. The concern is that these platforms, under the guise of entertainment or information dissemination, are actively shaping perceptions and potentially undermining democratic discourse.

Technically, DeepSeek operates on a principle similar to that of other chatbots built on large language models, such as ChatGPT, Claude, and Copilot, drawing on vast amounts of digital text to generate responses. However, this technology also carries the risk of “hallucinations,” in which the chatbot produces inaccurate, irrelevant, or nonsensical information. While this is a general issue with large language models, DeepSeek’s propensity for disinformation is exacerbated by its adherence to China’s strict online censorship and control. This control is primarily aimed at suppressing dissent against the Communist Party, leading DeepSeek to avoid or deflect sensitive topics such as Xi Jinping’s leadership, the Tiananmen Square protests, and the status of Taiwan.

DeepSeek’s responses to prompts clearly reflect its built-in guardrails and censorship mechanisms. The chatbot consistently avoids answering sensitive questions about the Chinese government’s influence over its product. Tests conducted by NewsGuard, using a sample of false narratives related to China, Russia, and Iran, revealed that DeepSeek’s answers mirrored China’s official stance 80% of the time, with a third of its responses containing explicitly false claims previously disseminated by Chinese officials. This consistent alignment with the official narrative raises serious questions about the chatbot’s objectivity and its potential to be misused for propaganda purposes.

The case of the Bucha massacre provides a stark example of DeepSeek’s tendency to parrot the official Chinese line, even in the face of overwhelming evidence. When questioned about the baseless claim that Ukrainians staged the massacre, the chatbot evaded the question, echoing official Chinese statements that called for “objectivity and fairness” and refrained from commenting without “comprehensive understanding and conclusive evidence.” This response mirrors the public stance taken by Chinese officials at the time, despite substantial evidence pointing to Russian culpability. This incident underscores the chatbot’s tendency to prioritize adherence to the official narrative over acknowledging established facts.

China’s broader information strategy is not limited to AI chatbots. The country has long employed a multifaceted approach to bolster its geopolitical standing and undermine rivals. This involves utilizing “soft power” tools like state-controlled media, coupled with covert disinformation campaigns. Recent reports have documented influence campaigns targeting entities critical of China, including Uniqlo for its stance on Xinjiang cotton and Safeguard Defenders, a human rights organization. These campaigns often involve coordinated efforts across various platforms to spread disinformation and discredit dissenting voices, highlighting the sophisticated and pervasive nature of China’s information operations. The emergence of AI-powered tools like DeepSeek adds a new dimension to this existing strategy, allowing for the automated dissemination of propaganda and the potential manipulation of online discourse on a vast scale.