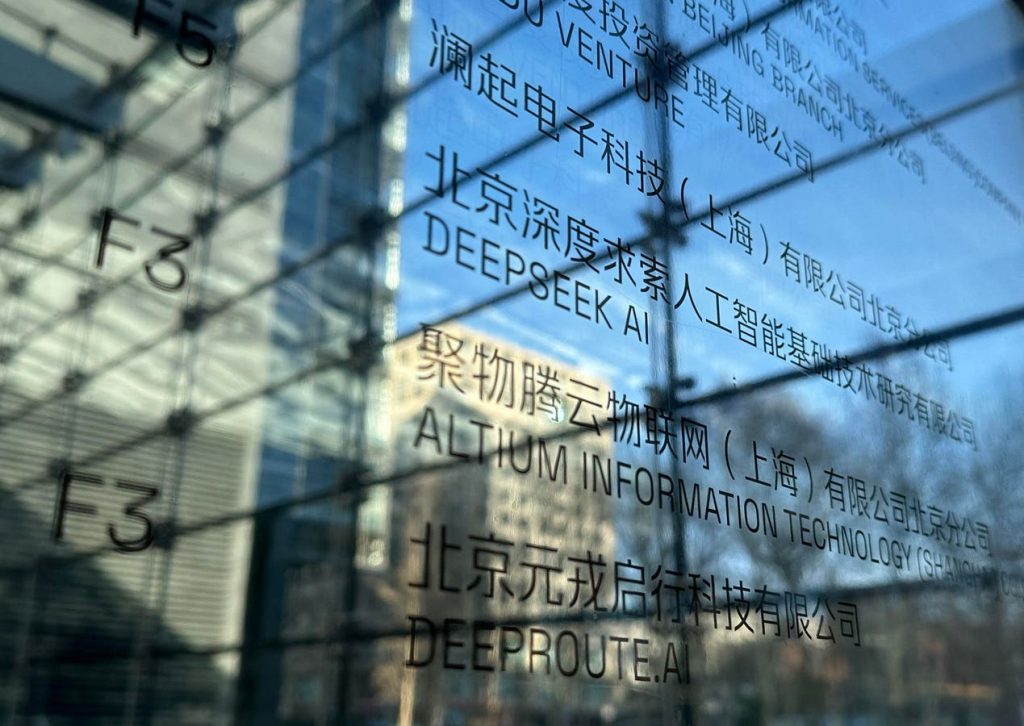

DeepSeek Disrupts AI Training Landscape with Cost-Effective Approach

The artificial intelligence (AI) landscape shifted sharply when the Chinese AI company DeepSeek announced its AI training methodology. DeepSeek claims that its DeepSeek-R1 model rivals the performance of models from industry giants, such as OpenAI’s GPT-4 and Meta’s Llama, while drastically reducing the required training infrastructure and associated costs. The company asserts it trained its model using only 2,048 Nvidia H800 GPUs, a fraction of the resources typically employed, at a cost of approximately $5.58 million. (DeepSeek reported these figures for DeepSeek-V3, the base model on which R1 builds, and they cover only the final training run.) If validated, this claim points toward a democratization of AI training, potentially disrupting the current cloud-centric model dominated by hyperscalers and opening the door to broader market participation.
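As a rough sanity check on the figures above, the following sketch converts the claimed budget into GPU-hours and wall-clock time. The rental rate of $2 per GPU-hour is an assumption chosen for illustration, not a figure from this article, and the result scales inversely with whatever rate is used.

```python
# Back-of-envelope check of the claimed training budget.
# ASSUMPTION: the per-GPU-hour rental rate is illustrative, not from the article.
TOTAL_COST_USD = 5_580_000      # claimed training cost (from the article)
NUM_GPUS = 2_048                # claimed Nvidia H800 count (from the article)
ASSUMED_USD_PER_GPU_HOUR = 2.0  # hypothetical rental rate


def training_estimate(total_cost, num_gpus, usd_per_gpu_hour):
    """Return (gpu_hours, wall_clock_days) implied by a budget and a rental rate."""
    gpu_hours = total_cost / usd_per_gpu_hour
    wall_clock_days = gpu_hours / num_gpus / 24
    return gpu_hours, wall_clock_days


gpu_hours, days = training_estimate(
    TOTAL_COST_USD, NUM_GPUS, ASSUMED_USD_PER_GPU_HOUR
)
print(f"Implied GPU-hours: {gpu_hours:,.0f}")
print(f"Implied wall-clock time on {NUM_GPUS:,} GPUs: {days:.1f} days")
```

Under that assumed rate, the budget corresponds to about 2.79 million GPU-hours, or just under two months of continuous use of the full cluster.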

Democratizing AI: Breaking Down Barriers to Entry

DeepSeek’s approach combines FP8 precision, a modular architecture, and in-house communication optimizations such as DualPipe to achieve this efficiency. The significance of the achievement lies less in the model itself than in its potential to dismantle the cost barrier that has limited AI training to a select few. The prohibitive cost of traditional AI training has created a market in which only the largest hyperscalers could participate, leading to a centralized and limited ecosystem. DeepSeek’s method promises to change this dynamic by making AI training accessible to a wider range of enterprises. This shift could spark increased competition and innovation, potentially leading to a more diverse and robust AI landscape.
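To illustrate why a lower-precision format such as FP8 matters, the sketch below compares the memory needed just to hold a model's weights at different precisions. The 100-billion parameter count is a hypothetical round number, not DeepSeek's model size, and the calculation deliberately ignores gradients, optimizer state, and activations.

```python
# Weight-storage footprint at different numeric precisions.
# ASSUMPTION: the parameter count is a hypothetical round number for illustration.
BYTES_PER_PARAM = {"FP32": 4, "BF16": 2, "FP8": 1}
HYPOTHETICAL_PARAMS = 100_000_000_000  # 100 billion, illustrative only


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


footprints = {
    name: weight_memory_gb(HYPOTHETICAL_PARAMS, size)
    for name, size in BYTES_PER_PARAM.items()
}
for name, gb in footprints.items():
    print(f"{name}: {gb:,.0f} GB")
```

Each step down in precision halves the bytes stored per value, and the same reduction applies to any of those values that must be moved between GPUs, which is one reason precision and communication optimizations tend to be discussed together.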

Infrastructure Implications: A Shift, Not an Elimination

While DeepSeek’s methodology reduces the reliance on expensive, high-end GPUs, it does not remove the need for robust supporting infrastructure. High-performance storage, low-latency networking, and strong data management frameworks remain essential for efficient AI training. Large datasets require high-throughput storage systems, while advanced networking is crucial to minimize bottlenecks in communication between nodes. Furthermore, data governance concerns such as compliance and security, along with operational needs such as checkpointing, remain paramount for enterprises venturing into AI. DeepSeek’s innovation therefore presents a significant opportunity for infrastructure providers to offer tailored solutions that meet the evolving needs of a decentralized AI training market.
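The link between storage throughput and checkpointing can be made concrete with a simple estimate of how long a synchronous checkpoint write takes. Every number below is hypothetical, and the sketch assumes training pauses for the full duration of the write, which real systems often avoid with asynchronous or sharded checkpointing.

```python
# Time a training job would stall while writing one checkpoint synchronously.
# ASSUMPTION: checkpoint size and storage throughputs are hypothetical values.
HYPOTHETICAL_CHECKPOINT_GB = 1_000
HYPOTHETICAL_THROUGHPUTS_GB_PER_S = (1, 10, 50)


def checkpoint_write_seconds(checkpoint_gb, storage_gb_per_s):
    """Seconds needed to write a checkpoint at a sustained storage throughput."""
    if storage_gb_per_s <= 0:
        raise ValueError("storage throughput must be positive")
    return checkpoint_gb / storage_gb_per_s


write_times = {
    rate: checkpoint_write_seconds(HYPOTHETICAL_CHECKPOINT_GB, rate)
    for rate in HYPOTHETICAL_THROUGHPUTS_GB_PER_S
}
for rate, seconds in write_times.items():
    print(f"{rate:>3} GB/s -> {seconds:,.0f} s per checkpoint")
```

Because checkpoints are taken repeatedly over a run that may last weeks, the gap between the slowest and fastest case here compounds into a meaningful share of total GPU time.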

Nvidia: Navigating the Changing Tide

The initial market reaction to DeepSeek’s announcement included a sharp drop in Nvidia’s stock price, reflecting concerns about potential disruption to its dominance in the AI GPU market. While DeepSeek’s use of the H800, a variant of Nvidia’s H100 with reduced interconnect bandwidth, suggests a viable alternative to top-tier hardware, Nvidia remains well positioned to navigate this evolving landscape. The company’s entrenched ecosystem, including its CUDA platform and its investments in DGX systems and Mellanox networking, offers a significant advantage. Furthermore, Nvidia has diversified its data center business beyond GPUs, with growing revenue streams from networking, software, and services. These offerings, which include NVIDIA AI Enterprise, Omniverse, and AI microservices, contribute higher-margin revenue and are less likely to be directly affected by DeepSeek’s innovations.

Broadcom and Marvell: Poised for Growth in a Decentralized AI Market

The sell-off also hit the share prices of custom silicon providers such as Broadcom and Marvell. However, DeepSeek’s approach may ultimately prove beneficial for these companies. Their significant revenue from custom silicon for public cloud providers is largely focused on inference acceleration, a domain not directly affected by DeepSeek’s focus on training. Moreover, their custom silicon revenue is heavily influenced by non-AI projects. The increasing demand for low-latency, high-throughput networking, which is essential for DeepSeek’s distributed training framework, aligns with Broadcom’s strength in Ethernet switching and Marvell’s expertise in energy-efficient, high-bandwidth interconnects. Rather than a disruption, DeepSeek’s approach presents an opportunity for these companies to expand their reach into a more decentralized AI market.

A New Era of AI: Efficiency, Accessibility, and Innovation

DeepSeek’s claims, if reproducible, signify a paradigm shift in the AI training landscape. Lower costs and broader access would allow a wider range of enterprises to participate in AI development. This transition, while potentially disruptive for companies like OpenAI and requiring adaptation from established players like Nvidia, ultimately benefits the IT industry as a whole. Enterprise server, storage, and networking providers stand to gain, as does the broader ecosystem of companies supporting the enterprise AI agenda. The next phase of AI infrastructure will prioritize efficiency, accessibility, and innovation, reshaping how businesses approach and use AI. That shift calls for strategic realignment across the ecosystem as stakeholders prepare for a more dynamic and competitive market, one made more inclusive and faster-moving by training methodologies like those DeepSeek describes.